Computational Control of Accelerators (CDL4)

Complex control processes in large-scale plants, for example in manufacturing or in scientific experiments, are operated using a multi-layered, highly distributed hardware-software architecture. The large number of sensors, control units, and other infrastructure components generates large amounts of data, and the underlying physical processes require this data to be handled at high speed for control purposes. Configuration and operation rely on extensive expert knowledge. Currently, however, only a small part of the available data can be processed and put to purposeful use.

Taking the control and regulation system of the European XFEL (EuXFEL) and possible extensions as examples, the CDL Computational Control of Accelerators considers process control as a "big data" problem and develops novel approaches for optimization, diagnosis, control, and maintenance. The objectives are, for example, to increase the precision of scientific instruments and the availability of the overall system.

In the case of EuXFEL, the computer infrastructure is distributed over 3 kilometers and consists of 300 computing racks that generate up to 30 TByte of raw data per day. Each computing rack contains numerous microcontrollers for data acquisition and actuator control. Above this front-end layer, a middle layer, implemented mainly in software, manages the higher-level control. At the application level, user data, for example from imaging sensors, are finally evaluated and used.
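To put these figures in perspective, the following back-of-the-envelope sketch converts the stated daily volume into average data rates. It assumes decimal units (1 TByte = 10^12 bytes) and, purely for illustration, that the load is spread evenly over time and over all racks; since 30 TByte per day is quoted as an upper bound, real per-rack and peak rates will differ. The function name is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# Figures taken from the text; decimal TByte is an assumption.
RAW_BYTES_PER_DAY = 30e12   # up to 30 TByte of raw data per day
NUM_RACKS = 300             # computing racks along the facility


def average_rate_mb_per_s(bytes_per_day: float, sources: int = 1) -> float:
    """Average rate in MByte/s per source, assuming an even spread."""
    return bytes_per_day / sources / SECONDS_PER_DAY / 1e6


aggregate_rate = average_rate_mb_per_s(RAW_BYTES_PER_DAY)
per_rack_rate = average_rate_mb_per_s(RAW_BYTES_PER_DAY, NUM_RACKS)

print(f"aggregate: {aggregate_rate:.1f} MByte/s")
print(f"per rack:  {per_rack_rate:.2f} MByte/s")
```

Under these assumptions the facility averages roughly 350 MByte/s in total and about 1.2 MByte/s per rack, which corresponds to 100 GByte per rack per day.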

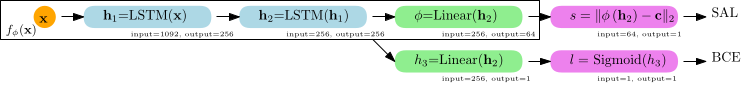

For control, data must be processed in real time. A complete view can only be achieved through efficient decentralized preprocessing. At the same time, reaction latencies below tens of microseconds must be achieved, so control and diagnostics cannot be performed with conventional off-the-shelf hardware. CDL4 evaluates and develops specialized hardware that meets this time constraint by using Field Programmable Gate Arrays (FPGAs) as highly customizable computation hardware. Furthermore, current progress in machine learning provides further insights: by learning from existing data, these algorithms can adapt to varying operating conditions and thereby improve on state-of-the-art algorithms.
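The idea of decentralized preprocessing can be illustrated with a minimal sketch: each front-end node reduces its raw samples to a small, mergeable summary, and a higher layer combines the summaries into a global view without ever seeing the raw data. This is not the EuXFEL implementation; the `Summary` type, the function names, and the choice of statistics (count, sum, minimum, maximum) are assumptions made for illustration, and a real system would run such reductions on FPGA or microcontroller hardware rather than in Python.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Iterable


@dataclass(frozen=True)
class Summary:
    """Compact, mergeable description of a block of raw samples."""
    count: int
    total: float
    minimum: float
    maximum: float

    @property
    def mean(self) -> float:
        return self.total / self.count


def summarize(samples: Iterable[float]) -> Summary:
    """Local step: runs on one (hypothetical) front-end node."""
    values = list(samples)
    if not values:
        raise ValueError("cannot summarize an empty block")
    return Summary(len(values), sum(values), min(values), max(values))


def merge(a: Summary, b: Summary) -> Summary:
    """Combine two summaries; associative, so merge order does not matter."""
    return Summary(
        a.count + b.count,
        a.total + b.total,
        min(a.minimum, b.minimum),
        max(a.maximum, b.maximum),
    )


def global_view(summaries: Iterable[Summary]) -> Summary:
    """Central step: needs only the small summaries, not the raw data."""
    return reduce(merge, summaries)


# Two nodes preprocess their own data; only the summaries are transmitted.
node_a = summarize([1.0, 2.0, 3.0])
node_b = summarize([10.0])
overall = global_view([node_a, node_b])
print(overall.count, overall.mean, overall.minimum, overall.maximum)
```

Because `merge` is associative, summaries can be combined hierarchically, for example per rack first and then across racks, which matches the layered architecture described above.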